Speed-Building an AI 'Will My Boss Hate This?' Detector with Lovable

The real revelation isn't the tool itself; it's that a comms professional with no coding experience can build something this functional in three hours. The barriers between "I wish this existed" and "I built this" are crumbling.

From idea to working app, an AI-powered communications studio, in the time it takes to watch Oppenheimer: bugs, breakthroughs, and all. Here’s what you can build in three hours.

The Problem We're Trying to Solve

Every comms professional, even the battle-hardened ones, has sent something that landed badly with a key stakeholder. Hours of drafting, endless tweaks… and you’re still blindsided by someone’s reaction.

So, what if you could test and refine your message before hitting send? Imagine running your content past a digital stand-in tuned to the same tastes, quirks, and priorities as your boss, client, or that tricky stakeholder. Someone (or something) that gives honest, fast feedback, every time.

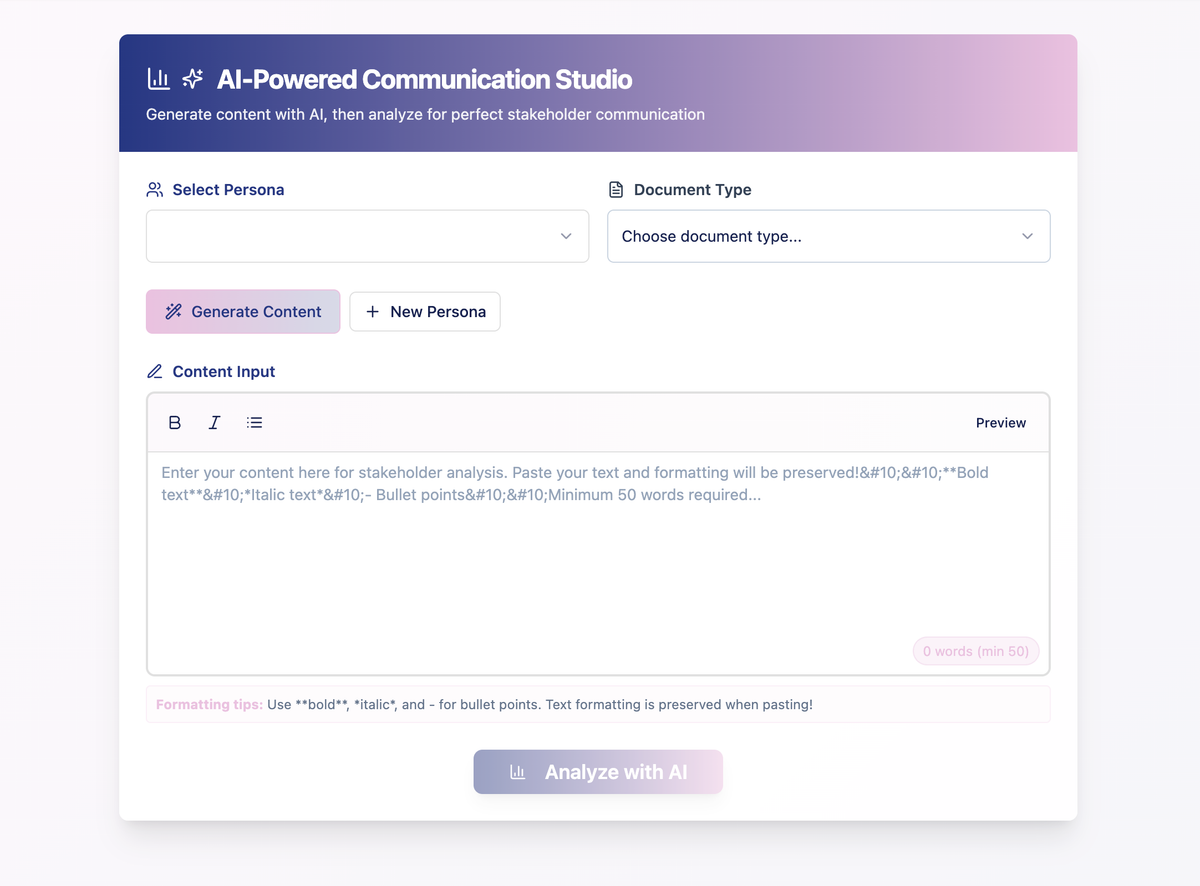

Enter the Faur Stakeholder Lens: An AI tool that analyses your content through the eyes of different stakeholders before you hit send. The aim is to go beyond a few generic archetypes and build specific, realistic AI “doppelgängers” of your actual recipients.

Why it matters: If it works, it will save face, save time, and cut down those painful revision cycles by anticipating feedback before it arrives.

Reality check: This won’t replace human judgement, but it might catch those “obvious” misses that haunt you at 3am. (Or is that just me?)

The Plan (And Where It Could Go Wrong)

Our hypothesis: AI can simulate different stakeholder perspectives well enough to provide useful pre-flight checks on comms.

Success looks like:

- Building a functional app with some reasonably complex features, without writing code or drawing on expert technical knowledge. (For example, I wouldn't have the slightest idea where to start with building a sign-in profile for a website.)

- Accurately flagging negative reactions to risky or poorly written content

- Giving genuinely distinct feedback for different personas (e.g. CEO vs Legal vs Employee Rep)

- Suggesting actionable improvements, not just “generic” criticism

Failure, on the other hand, would look like this: Every piece of content gets similar scores regardless of quality, or the personas all sound the same.
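The first failure mode can be turned into a quick automated check. This is a minimal sketch, assuming scores run from 0 to 100; `scoreSpread`, `looksLikeDefaulting` and the 15-point threshold are hypothetical choices, not part of the app.

```typescript
// Hypothetical check for the "similar scores regardless of quality" failure:
// if a strong draft and a deliberately bad draft score almost the same,
// the analysis is probably not doing real work.

/** Difference between the highest and lowest score in a batch. */
function scoreSpread(scores: number[]): number {
  if (scores.length === 0) {
    throw new Error("No scores to compare");
  }
  return Math.max(...scores) - Math.min(...scores);
}

/**
 * True when scores for drafts of very different quality sit within
 * `minSpread` points of each other (assumed 0-100 scale).
 */
function looksLikeDefaulting(scores: number[], minSpread = 15): boolean {
  return scoreSpread(scores) < minSpread;
}

console.log(looksLikeDefaulting([75, 75, 75])); // true: every draft scored the same
console.log(looksLikeDefaulting([88, 14]));     // false: good and bad drafts separate
```

Feeding it one good and one terrible draft per persona is a cheap smoke test to rerun after every change.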

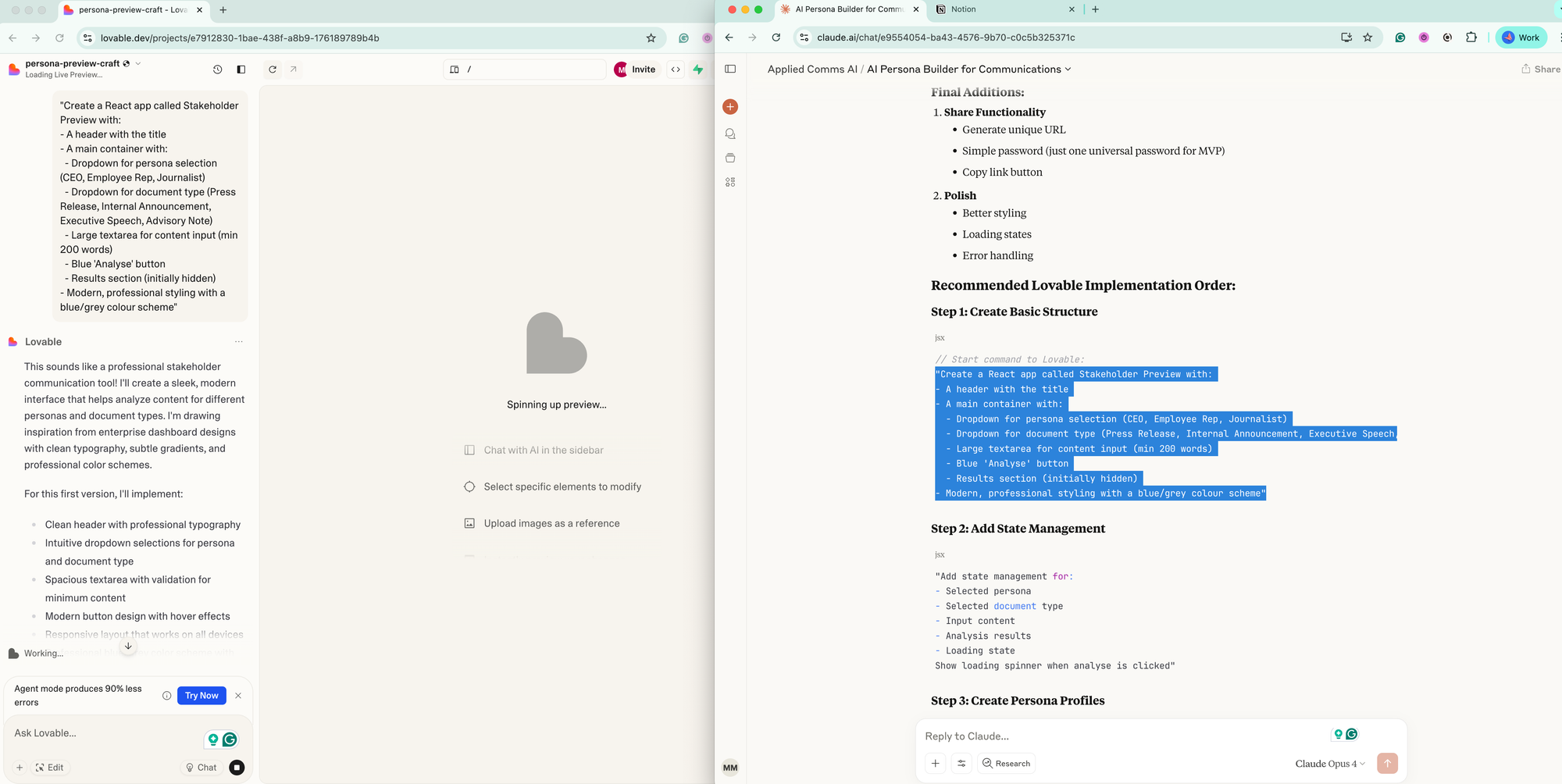

Technical approach:

- Platform: Lovable (AI-powered app builder)

- AI model: Claude Opus 4 (and a bit of OpenAI GPT-4)

- Additional tools: Supabase for data (I signed up on Lovable's recommendation and started using it during the experiment!)

- Key features: Persona-based analysis, content generation, amendment suggestions
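Persona-based analysis essentially means sending the same draft to the model with a different stakeholder description each time. The sketch below shows one way to structure that; the `Persona` fields, the prompt wording and the JSON shape (`score`, `concerns`, `amendments`) are illustrative assumptions, not the app's actual prompt.

```typescript
// Illustrative persona record; field names are hypothetical.
interface Persona {
  name: string;          // e.g. "CEO", "Legal", "Employee Rep"
  priorities: string[];  // what this stakeholder cares about most
  tone: string;          // how they tend to react
}

interface PromptOptions {
  includeAmendments: boolean; // also ask for a revised draft
}

/** Builds one prompt asking the model to review content as a single persona. */
function buildAnalysisPrompt(
  persona: Persona,
  content: string,
  options: PromptOptions = { includeAmendments: false },
): string {
  const fields = ['"score": number from 0 to 100', '"concerns": string[]'];
  if (options.includeAmendments) {
    fields.push('"amendments": string (a revised draft)');
  }
  return [
    `You are ${persona.name}. Your priorities: ${persona.priorities.join(", ")}.`,
    `You typically respond in a ${persona.tone} way.`,
    "Review the draft below from your perspective only.",
    `Reply with raw JSON containing { ${fields.join(", ")} } and no other text.`,
    "",
    "DRAFT:",
    content, // the user's draft always goes last
  ].join("\n");
}

const legal: Persona = {
  name: "Legal",
  priorities: ["regulatory risk", "consultation obligations"],
  tone: "cautious, precise",
};

console.log(buildAnalysisPrompt(legal, "We are moving offices next month.", { includeAmendments: true }));
```

Running the same draft through one call per persona keeps the feedback separable, which is what makes "CEO vs Legal vs Employee Rep" comparisons possible.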

The Process

Hour 1: From No-Code Prompts to Prototype

- 15:03: Started with a Claude-generated prompt for Lovable. Immediate error (“UserTie is not a valid export”). Welcome to no-coding.

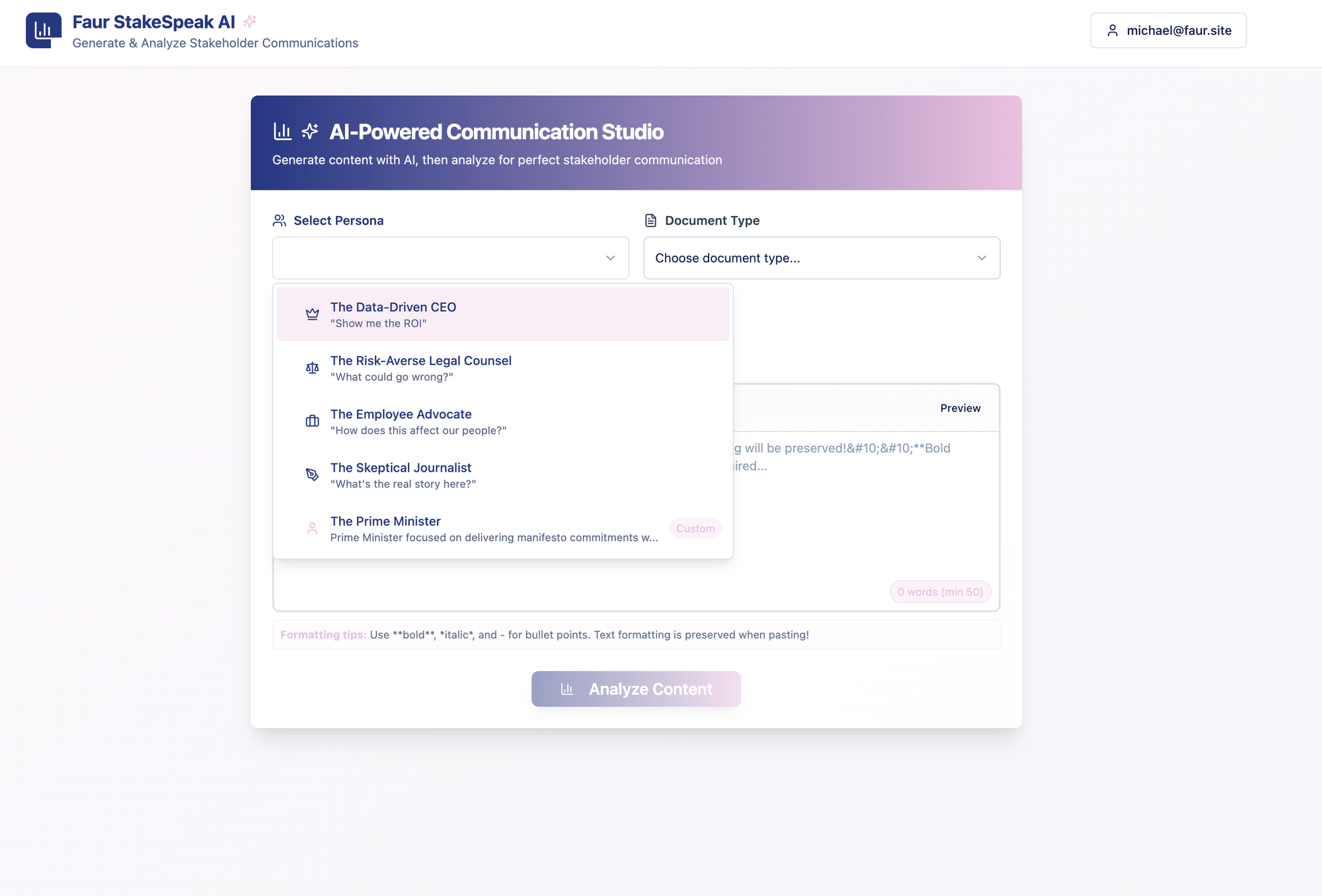

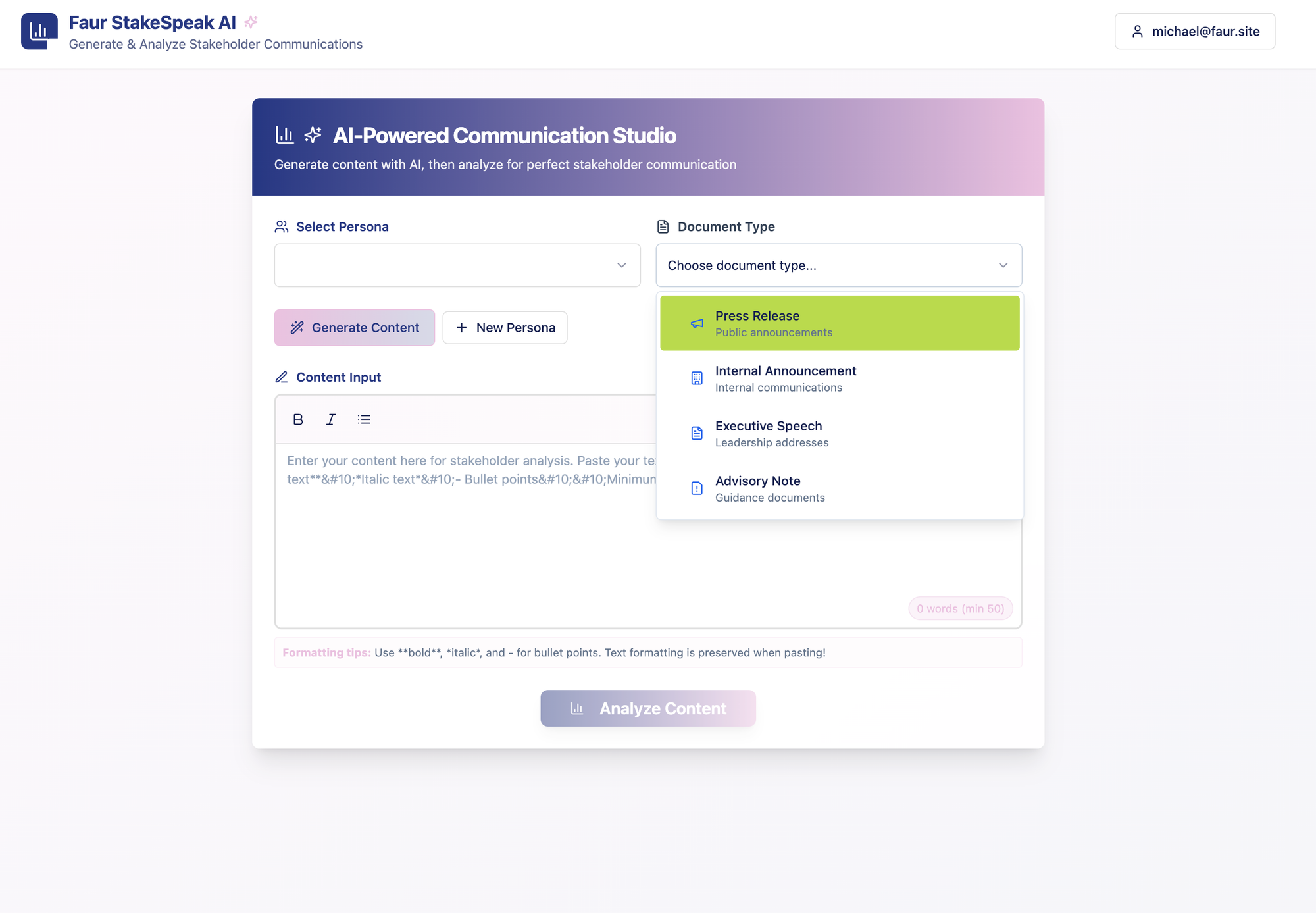

- 15:15: Fixed. Basic UI now working, with dropdowns for the basic personas and document types.

- 16:05: Discovered Lovable’s “Implement this plan” feature. It outlines your build, then executes it in one click. This is a revelation for non-coders.

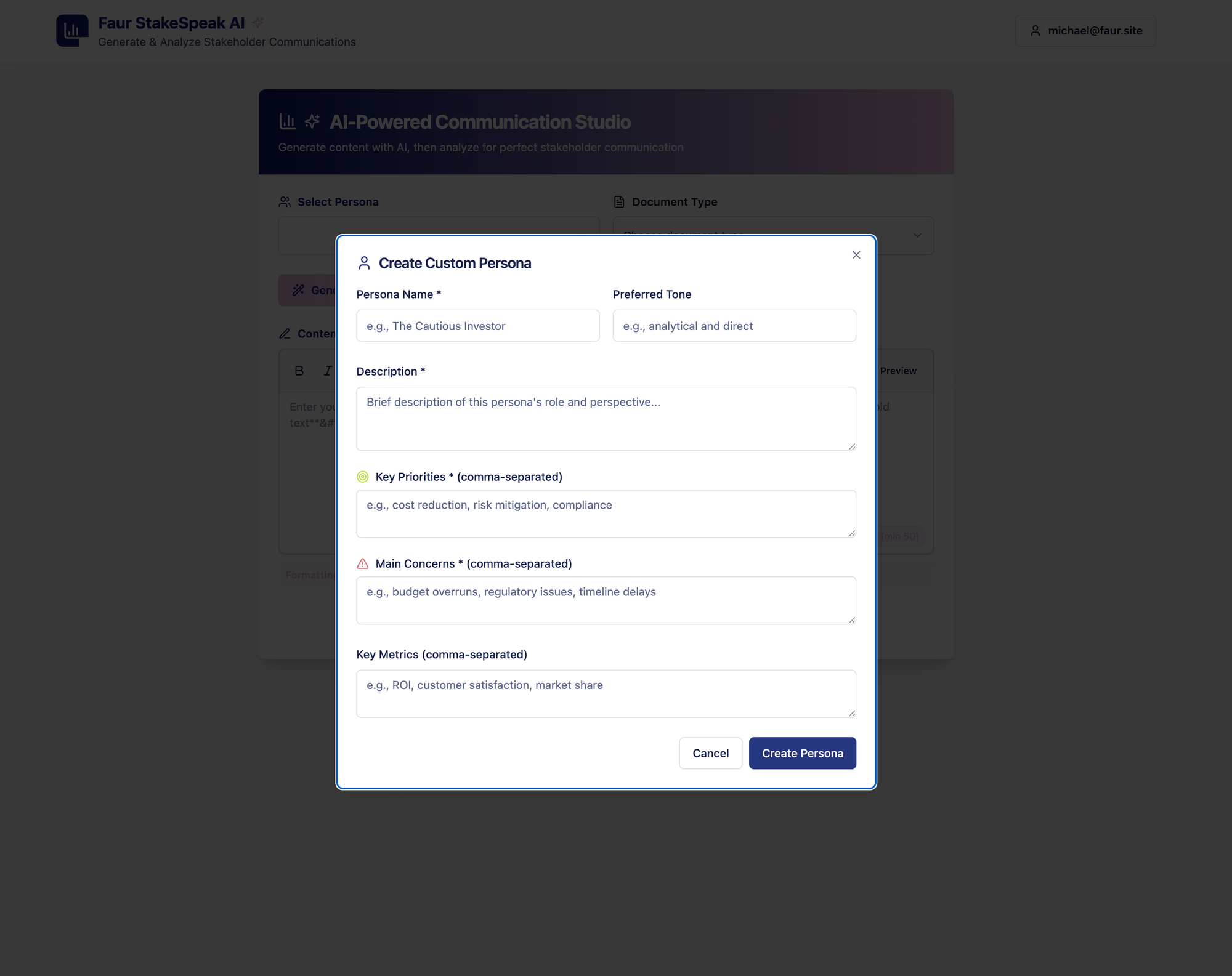

- 16:19: Sidetracked by the OpenAI API setup, namely working out how on earth to set it up. Thankfully, it was relatively straightforward, with Lovable pointing me to the right place. After that, I set up Supabase, which let me provide sign-in so users can build and save their own custom personas. It felt suspiciously simple.

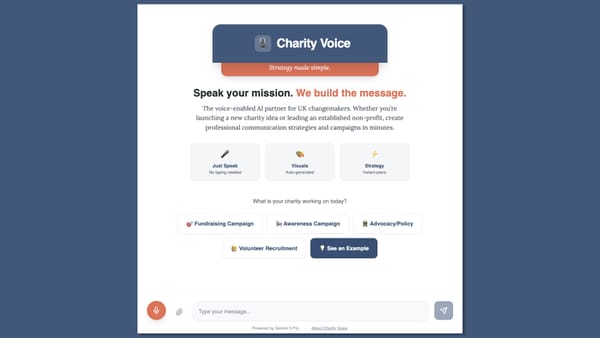
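For anyone wondering what "setting up the OpenAI API" involves under the hood: the app sends an HTTP request to OpenAI's Chat Completions endpoint, with your API key in an `Authorization` header. The sketch below only builds that request (you would pass the result to `fetch`); `buildChatRequest` is a hypothetical helper, and the key should live server-side, for example as a Supabase secret, rather than in browser code. Setting `response_format` to `json_object` asks the model to reply with plain JSON.

```typescript
// Hypothetical helper: builds (but does not send) a Chat Completions request.
// Run this server-side so the API key never reaches the browser.

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface RequestSpec {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

function buildChatRequest(apiKey: string, model: string, messages: ChatMessage[]): RequestSpec {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model,
        messages,
        // JSON mode: OpenAI's docs ask that the messages themselves
        // also instruct the model to produce JSON when this is set.
        response_format: { type: "json_object" },
      }),
    },
  };
}

// Usage (server-side, model name is your choice):
//   const { url, init } = buildChatRequest(apiKey, modelName, messages);
//   const response = await fetch(url, init);
```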

- 16:24: It’s alive! First version previewed at comms-persona-preview.lovable.app (note: this link now shows the latest version!).

Lesson: Even with AI’s help, the boring stuff – API keys, import errors – still eats time.

Hour 2: Features and Discoveries

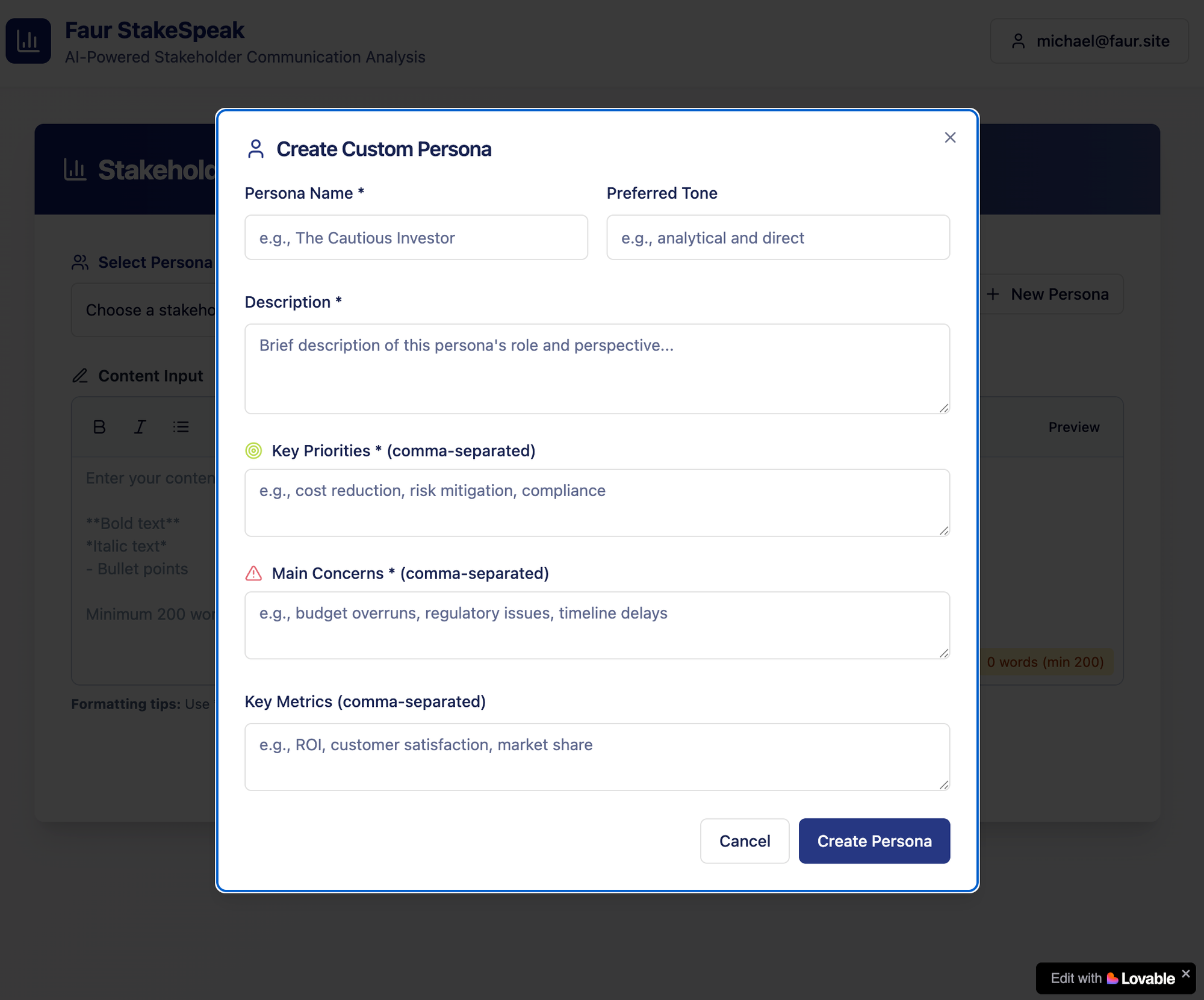

- 16:32: Created a custom Keir Starmer persona using Claude – hey, you can dream big! Then hit my Claude Pro usage limit (as a side note, are any other Pro users finding they’re quickly hitting their daily limit?), so switched to ChatGPT to generate a test press release.

- 16:45: First major bug: custom personas crashed the app. Blank page of death.

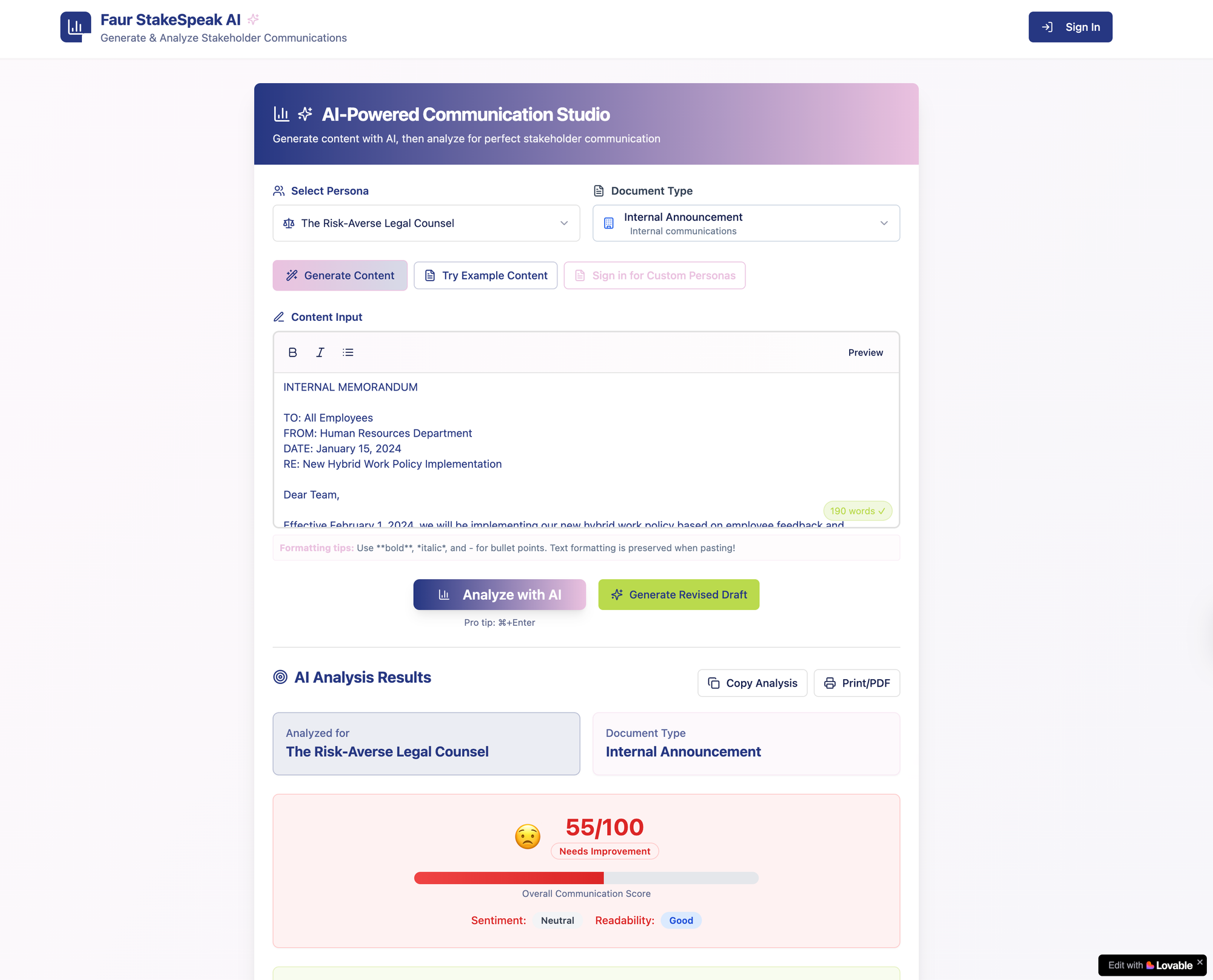
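The post doesn't record what caused this crash. In React apps, which is what Lovable generates, a blank page often means an uncaught error during rendering, for instance calling `.join` on a field a saved persona never had. One defensive pattern, sketched here as an assumption rather than the actual fix, is to normalise every custom persona before it reaches the UI; `normalizePersona` and its fields are hypothetical.

```typescript
// Hypothetical shape of a persona after normalisation.
interface Persona {
  name: string;
  priorities: string[];
  tone: string;
}

/**
 * Turns an untrusted record (e.g. a row loaded from the database)
 * into a Persona with safe defaults, so rendering never reads undefined.
 */
function normalizePersona(raw: unknown): Persona {
  const r = (typeof raw === "object" && raw !== null ? raw : {}) as Record<string, unknown>;
  const name = typeof r.name === "string" && r.name.trim() !== "" ? r.name.trim() : "Untitled persona";
  const priorities = Array.isArray(r.priorities)
    ? r.priorities.filter((p): p is string => typeof p === "string") // drop non-text entries
    : [];
  const tone = typeof r.tone === "string" ? r.tone : "neutral";
  return { name, priorities, tone };
}

console.log(normalizePersona({ name: "Keir Starmer" }));
// { name: 'Keir Starmer', priorities: [], tone: 'neutral' }
```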

- 17:19: Added a content generation feature, so users can quickly get inspiration rather than staring at a blank text box. The interface felt cluttered, so I asked Lovable to clean it up and add Faur branding.

Lesson: AI tools can build fast, but not always right. Testing is essential.

Here is where I was after about one and a half hours, when I stopped for the day:

Hour 2.5: The "Everything is Fine" Bug

Here's where it got interesting. Picking the build back up on a new day, I deliberately wrote a terrible press release: full of jargon, a buried lead, zero news value. The app gave it 95%.

The discovery: The app wasn't actually analysing anything. OpenAI was returning JSON wrapped in Markdown formatting, the parsing was failing, and everything defaulted to a generic 75% score.
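Why would every draft land on the same score? Models often wrap JSON in a Markdown code fence, and `JSON.parse` rejects the fence characters. If that error is caught and replaced with a default, the failure becomes invisible. Below is a minimal reproduction of that pattern; `parseScoreNaively` is hypothetical, and only the 75 default comes from this post.

```typescript
// Reproduces the silent-failure pattern: parse errors are swallowed
// and replaced with a plausible-looking default score.
const FENCE = "`".repeat(3); // a Markdown code fence, built with repeat() so it doesn't clash with this article's formatting

function parseScoreNaively(modelOutput: string): number {
  try {
    const data = JSON.parse(modelOutput) as { score: number };
    return data.score;
  } catch {
    return 75; // the generic fallback: hides the fact that nothing was analysed
  }
}

// What the model sent back: valid JSON, but wrapped in a fence.
const reply = `${FENCE}json\n{"score": 12}\n${FENCE}`;

console.log(parseScoreNaively('{"score": 12}')); // 12
console.log(parseScoreNaively(reply));           // 75, because the fence makes JSON.parse throw
```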

The lesson: A working UI doesn't mean working functionality. Always test with intentionally bad inputs.

Hour 3: The Final Sprint

Fixed the JSON parsing issue, even though I’m still not entirely sure what that means. Suddenly, lousy content was scoring 10-20%. Success at spotting duds!
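For anyone else unsure what "fixing the JSON parsing" means: the model's reply is just text, and parsing turns it into structured data the app can use (a score, a list of concerns). One common fix, sketched below on the assumption that replies may arrive wrapped in a Markdown fence, is to strip the fence before parsing and to raise an error instead of falling back to a default. `parseAnalysis` and the `Analysis` shape are hypothetical; the post doesn't show the fix Lovable actually made.

```typescript
interface Analysis {
  score: number;      // assumed 0-100
  concerns: string[];
}

const FENCE = "`".repeat(3); // Markdown code fence

/** Removes an opening fence line (e.g. a fence plus "json") and a closing fence, if present. */
function stripCodeFences(text: string): string {
  let t = text.trim();
  if (t.startsWith(FENCE)) {
    const firstNewline = t.indexOf("\n");
    t = firstNewline === -1 ? "" : t.slice(firstNewline + 1);
  }
  if (t.endsWith(FENCE)) {
    t = t.slice(0, -FENCE.length);
  }
  return t.trim();
}

/** Parses the model's reply, failing loudly instead of inventing a score. */
function parseAnalysis(modelOutput: string): Analysis {
  const data = JSON.parse(stripCodeFences(modelOutput)) as Partial<Analysis>;
  if (typeof data.score !== "number" || data.score < 0 || data.score > 100) {
    throw new Error(`Model reply had no valid score: ${modelOutput.slice(0, 80)}`);
  }
  return { score: data.score, concerns: Array.isArray(data.concerns) ? data.concerns : [] };
}

const reply = `${FENCE}json\n{"score": 12, "concerns": ["Buried lead"]}\n${FENCE}`;
console.log(parseAnalysis(reply).score); // 12
```

Failing loudly matters as much as the stripping: an error message in the UI would have exposed the bug on day one, whereas a default score disguised it.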

Final 15 minutes: I threw seven quick improvements at Lovable, because why the hell not. Having resumed on a different day, I asked Claude what it thought could be improved and went with every one of its suggestions:

- Sample content button

- Copy results functionality

- Keyboard shortcuts (Cmd+Enter)

- Visual score indicators with emojis

- Print-friendly styles

- Footer credits

- Welcome modal
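Two of those features, the Cmd+Enter shortcut and the emoji score indicators, are small enough to sketch. Assuming scores run from 0 to 100, they might look like the following; the thresholds, the emoji choices, the extra Ctrl+Enter support for non-Mac keyboards and the `KeyLike` stand-in for a browser `KeyboardEvent` are all assumptions, not what Lovable generated.

```typescript
// Minimal stand-in for the KeyboardEvent fields we need,
// so the example runs outside a browser.
interface KeyLike {
  key: string;
  metaKey: boolean; // Cmd on macOS
  ctrlKey: boolean; // Ctrl on Windows/Linux
}

/** True for Cmd+Enter (or Ctrl+Enter): the "analyse now" shortcut. */
function isSubmitShortcut(e: KeyLike): boolean {
  return e.key === "Enter" && (e.metaKey || e.ctrlKey);
}

/** Maps a 0-100 score to a traffic-light emoji (thresholds are illustrative). */
function scoreToEmoji(score: number): string {
  if (score >= 70) return "🟢";
  if (score >= 40) return "🟡";
  return "🔴";
}

// In a React component, isSubmitShortcut would run inside an onKeyDown handler.
console.log(isSubmitShortcut({ key: "Enter", metaKey: true, ctrlKey: false })); // true
console.log(scoreToEmoji(15)); // 🔴
```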

Lovable implemented all seven at once, hitting a few errors but debugging itself as it went, and finished this final major task within a few minutes, which felt vaguely miraculous.

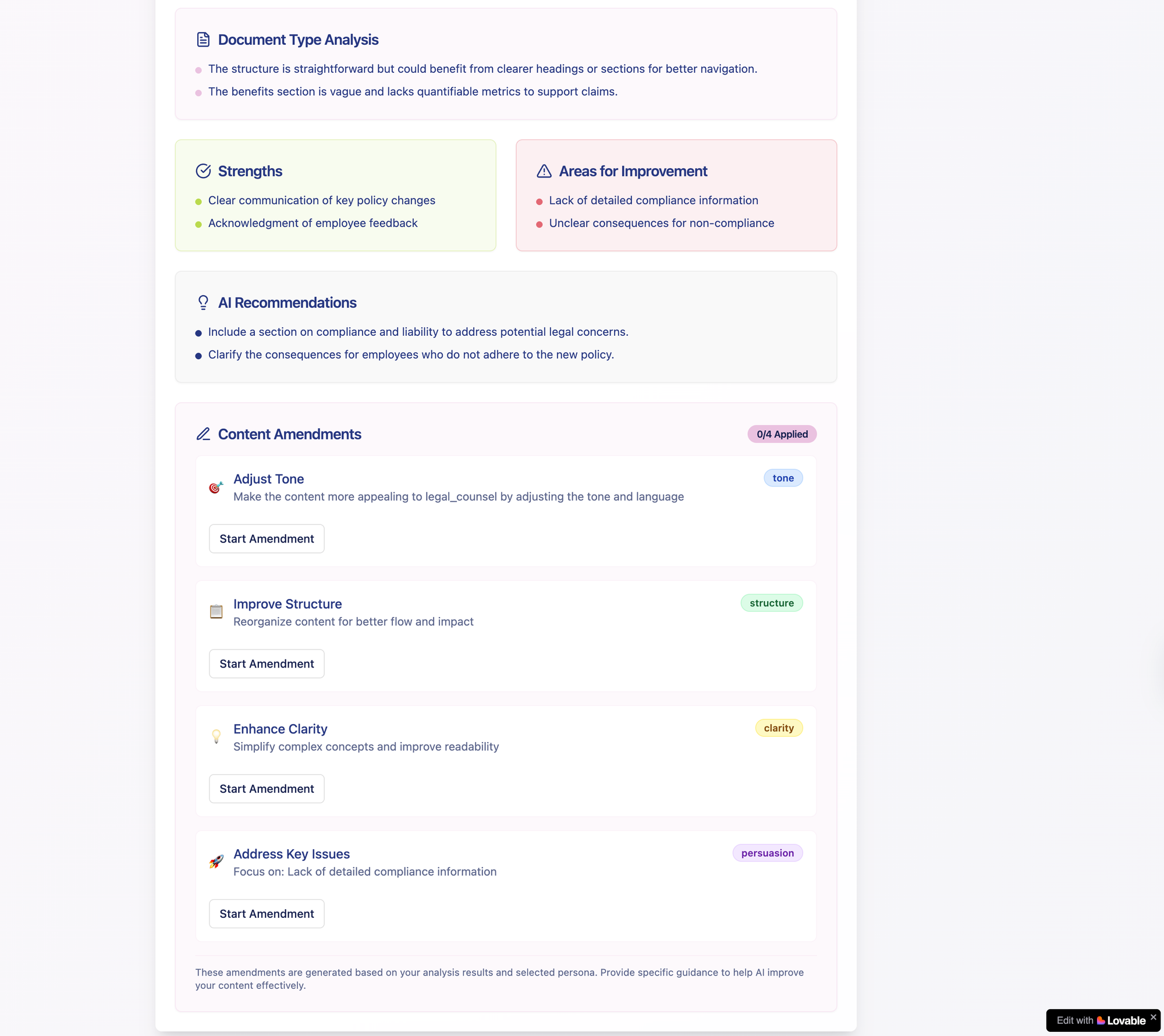

- 04:52: CSS syntax error. Fixed in 35 seconds.

- 05:06: Replaced "Follow-up Questions" with an "Amendments" feature, so the app actually modifies the user’s content based on feedback.

- 05:10: Made amendments optional and smart (changes tone, structure, clarity based on persona).

First Test: Does It Actually Work?

Scenario: Drafting an announcement about an office relocation (a classic comms headache).

- Traditional approach: 2–3 hours of rewrites and stakeholder reviews (potentially days, depending on how pernickety the stakeholders are), with the risk of missing key objections.

- With the tool: 5 minutes to test across all personas. The Employee Rep persona instantly flagged gaps in the staff-impact messaging; Legal flagged consultation requirements I’d missed.

Result: Not perfect, but surprisingly useful for catching obvious missteps before the human review stage, and a clear time-saver.

What We've Learned (So Far)

About AI in comms:

- AI can convincingly simulate different stakeholder perspectives (at least for first-pass review)

- The best insights come from testing content across multiple personas

- Generated “amendments” are often surprisingly actionable

About building tools:

- Asking the AI "What's missing?" can generate comprehensive improvement plans

- Visual feedback (scores, emojis) makes abstract analysis concrete

- Big bugs can hide in the gap between “it looks like it works” and “it actually works”

What I Would Change

Immediate priorities:

- Stakeholder Showdown: Compare all personas side-by-side.

- Export reports: Download feedback and scores.

- Team sharing: Share reviews internally.
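The first two priorities overlap: once each persona's result is stored, a side-by-side view and a downloadable report are mostly formatting. A sketch, assuming each result holds a persona name, a score and a list of concerns (`PersonaResult`, `csvField` and `toCsvReport` are hypothetical), that sorts the most critical stakeholder to the top and produces CSV:

```typescript
interface PersonaResult {
  persona: string;
  score: number;      // assumed 0-100
  concerns: string[];
}

/** Quotes a CSV field, doubling any embedded quotes (RFC 4180 style). */
function csvField(value: string | number): string {
  return `"${String(value).replace(/"/g, '""')}"`;
}

/** Lowest score first, so the stakeholder most likely to object leads the report. */
function toCsvReport(results: PersonaResult[]): string {
  const sorted = [...results].sort((a, b) => a.score - b.score);
  const rows = sorted.map((r) =>
    [r.persona, r.score, r.concerns.join("; ")].map(csvField).join(","),
  );
  const header = ["Persona", "Score", "Concerns"].map(csvField).join(",");
  return [header, ...rows].join("\n");
}

const report = toCsvReport([
  { persona: "CEO", score: 72, concerns: ["Too long"] },
  { persona: "Employee Rep", score: 35, concerns: ["No detail on staff impact"] },
]);
console.log(report);
```

The same sorted array could feed an on-screen comparison table, so the "showdown" view and the export would share one data path.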

Bigger questions this raises:

- How much should we trust AI to simulate human reactions?

- Could “pre-testing” with AI risk making comms too cautious?

- Will it kill authenticity if everyone optimises for “no objections”?

The Wrap-Up

Let’s be honest: This will never replace the years of nuanced, political stakeholder judgement that experienced comms professionals bring. It won’t catch every subtlety, or predict the totally irrational responses.

But for catching those “should’ve seen it coming” moments? For giving junior team members a safety net? For that late-night or crisis statement, when you can’t get instant stakeholder input? This is genuinely useful.


Try it yourself: https://comms-persona-preview.lovable.app/

Found this useful? Subscribe – if you haven’t already – and please forward this to a colleague who may be facing a similar challenge.

Applied Comms AI is powered by Faur. We help organisations navigate the intersection of communications and technology.