AI in Crisis Comms: Help or Hindrance?

"The key is preparation. Use AI now to understand its capabilities and limitations. Build your prompts library when you're calm. Test on low-stakes issues. Because when a real crisis hits, you don't want to be experimenting – you want proven tools and workflows ready to deploy." - Amanda Coleman

Even in today’s comically unpredictable landscape, crisis communications remains the ultimate high-stakes industry challenge: one where minutes can decide whether a reputation survives, and every word demands surgical precision.

A swathe of swanky-sounding AI tools has entered the market, promising revolutionary speed and scale. However, they come with significant risks, such as the notorious hallucinations that introduce falsehoods, over-alerting that creates noise, and sensitive data handling that creates compliance nightmares.

For the discerning comms professional, we wanted to take a closer look at each stage of crisis comms, to see where AI can deliver practical benefits, and where and how to stay cautious and make considered choices.

What follows is a playbook for using AI as a force multiplier while keeping human judgement at the critical decision points: one that can guide us now and point the way to future opportunities.

To help us navigate this landscape, we're joined by Amanda Coleman, one of the UK's leading crisis communication experts.

With over two decades managing communications for Greater Manchester Police – including some of the UK's most challenging incidents – Amanda literally wrote the book on crisis communications (three of them, in fact). Now Director of Amanda Coleman Communication Ltd, she advises organisations internationally on crisis preparedness and response. As a Chartered PR practitioner and Fellow of both CIPR and PRCA, Amanda brings hard-won frontline experience to the AI conversation.

Step 1: Early Signals & Monitoring

Machine learning has powered social listening for years, through tools such as Brandwatch, Talkwalker, and Meltwater: AI arguably just makes it faster, cheaper, and available to teams without enterprise budgets.

Where AI helps

- Volume processing: Monitor 10,000+ mentions daily vs 100s manually - catch issues hiding in the noise

- Pattern recognition: Spots unusual comment velocity (50 complaints in an hour vs the usual 5) before humans notice

- Multi-language alerts: Real-time translation means that viral TikTok in Portuguese doesn't blindside you tomorrow
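To make “comment velocity” concrete, here is a minimal Python sketch, assuming you can export mention timestamps from your listening tool. It counts mentions per clock hour and flags any hour that reaches a multiple of a normal baseline. The function names, the baseline of 5 per hour and the 5× multiplier are illustrative assumptions that mirror the example above; they are not settings from Brandwatch, Talkwalker, Meltwater or any other product, which typically use more sophisticated statistics.

```python
from collections import Counter
from datetime import datetime


def hourly_counts(timestamps):
    """Bucket mention timestamps into clock hours (e.g. 14:00-14:59)."""
    return Counter(ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps)


def velocity_alerts(timestamps, baseline_per_hour=5, multiplier=5):
    """Return the hours whose mention count reaches multiplier x baseline.

    baseline_per_hour and multiplier are illustrative assumptions; tune them
    against your own historical volumes.
    """
    threshold = baseline_per_hour * multiplier
    counts = hourly_counts(timestamps)
    return sorted(hour for hour, n in counts.items() if n >= threshold)


# Example: a normal hour (5 mentions) followed by a spike (50 mentions).
quiet = [datetime(2024, 5, 1, 13, m) for m in range(0, 50, 10)]
spike = [datetime(2024, 5, 1, 14, m) for m in range(50)]
print(velocity_alerts(quiet + spike))  # flags only the 14:00 hour
```

A fixed threshold is deliberately crude. It is easy to explain to a non-technical team, but it will also generate false positives, so alerts should go to a person for judgement rather than straight into a statement.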

Where it hinders

- False positives, sarcasm/irony blind spots

- Missing private/low-visibility channels (CS tickets, WhatsApp, internal chat)

Amanda's view: “Using AI for social media monitoring should be in place and is almost an entry level way of getting comfortable with the technology and what it can do. But it can also be subject to hallucinations which can derail the approach by putting the focus in the wrong place.

“Where it gets really interesting is predictive analytics – AI can spot a problem when it's starting to emerge, but as we get more sophisticated, we should be able to have alerts that the conditions exist for a problem or crisis to develop. This will allow swift action and possible mitigation, which elevates the risk management processes.”

- Try this for free: Set up Google Alerts and Talkwalker Alerts for: ("[Your brand]" OR "[CEO name]") AND (crisis OR complaint OR boycott OR outage)

Step 2: Situation Assessment

The promise here is tantalising: turn information chaos into clear briefings in seconds, not hours. But here, hallucinations and contradictions can be especially troublesome.

Where AI helps

- Source synthesis: Combine 20 news articles, 50 tweets, and 5 internal emails into one coherent timeline

- Fact extraction: Pull key data points (numbers affected, locations, times) from walls of text within seconds

- Contradiction flagging: Can highlight discrepancies, such as when your PR says "resolved" but customer service says "ongoing"

Where it hinders

- Papering over contradictions; hallucinating links; missing one-off critical facts

Amanda's view: “AI doesn't always access the latest information, and needs to be brought in house to avoid any confidentiality concerns.

“It could be used to assess your messaging and whether it's aligned to brand values and is within any legal and regulatory boundaries. But be aware of the challenge of biases creeping into the systems. AI, like people, is not infallible.”

Experiment with this prompt (public info only):

You're a crisis communications manager conducting rapid situation assessment.

Your role is to create a clear, factual briefing for senior leadership.

Summarise the following sources into a 200-word SITREP with these sections:

- CONFIRMED FACTS - Include source for each

- UNCONFIRMED REPORTS - Label as "UNCONFIRMED:"

- STAKEHOLDERS AFFECTED - List all groups impacted

- SPREAD/SCALE - Geographic reach, platforms affected, volume of complaints

- IMMEDIATE RISKS - What could escalate in next 2-4 hours

Additional instructions:

- Flag any contradictions between sources with "CONTRADICTION:"

- Use bullet points for clarity

- Include timestamps where available

- Highlight any regulatory/legal mentions

- Note if any media outlets are covering this

Sources to analyse:

[Paste PUBLIC links/excerpts only – no personal data]

- Compliance note: If personal data is involved, don’t paste it into public tools. Use an enterprise LLM covered by a data processing agreement (DPA), or anonymise first. See the UK ICO’s guidance on AI and data protection (fairness and transparency) and on when to run a DPIA.
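If you do need to work with real customer text, a first-pass scrub can act as a safety net. This minimal Python sketch, assuming UK-style phone numbers, replaces email addresses and phone numbers with placeholders. The patterns are illustrative assumptions and deliberately limited: they will not catch names, addresses or other identifiers, and masking a few fields is not the same as anonymisation under UK GDPR (pseudonymised data is still personal data). Treat it as a backstop before human review, not a substitute for an enterprise tool or a DPIA.

```python
import re

# Rough patterns for two common identifiers; they will NOT catch names,
# addresses, account numbers or other identifying details in free text.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
UK_PHONE = re.compile(r"(?:\+44\s?|\b0)\d{2,4}[\s-]?\d{3,4}[\s-]?\d{3,4}\b")


def redact(text):
    """Replace email addresses and UK-style phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return UK_PHONE.sub("[PHONE]", text)


print(redact("Customer jane.doe@example.com called from 07700 900123."))
# Names pass straight through and still need manual review:
print(redact("Sarah Jones is furious about the outage."))
```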

Step 3: Strategy & Message Development

This is where AI walks a tightrope: fast drafting is invaluable, but one tone-deaf statement can torpedo trust permanently.

Where AI helps

- Speed drafting: Generate 5 holding statement options in 2 minutes vs 30 minutes manually

- Tone calibration: Test if your apology sounds defensive ("we regret any inconvenience") vs genuine

- Completeness checking: Flags missing elements (no timeline for fix, no contact for questions)
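Completeness checking can be roughly approximated even without AI. This hypothetical Python sketch scans a draft holding statement for cue phrases linked to each required element and reports which elements seem to be missing. The element list and cue phrases are assumptions for illustration only. A check this crude will misfire (the cue "by " also matches "affected by"), which is exactly where an LLM, and ultimately a human reviewer, adds value.

```python
# Hypothetical checklist: each required element maps to cue phrases that
# suggest it is present. A keyword match is a prompt for human review,
# not proof the element is adequately covered.
REQUIRED_ELEMENTS = {
    "acknowledgement or apology": ["sorry", "apologise", "apologize", "we know"],
    "timeline for fix": ["by ", "within", "expect", "next update"],
    "contact for questions": ["contact", "call", "email", "helpline"],
}


def missing_elements(statement):
    """Return the names of required elements with no matching cue."""
    text = statement.lower()
    return [
        element
        for element, cues in REQUIRED_ELEMENTS.items()
        if not any(cue in text for cue in cues)
    ]


draft = "We are sorry for the disruption. Our engineers are working on a fix."
print(missing_elements(draft))
```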

Where it hinders

- Robotic phrasing; unintended legal admissions; cultural tone-deafness

Amanda's view: “Studies recently show 69 per cent of people can't tell what is real and what is not. This is the issue for approaches and messaging. We have to make sure that it is clear it is human and has empathy. Having the right prompt will provide a better outcome but without it can be wide of what is needed.

“Also, messaging isn't a process as in if you do X and Y then Z will happen. This makes it vital to put the people into the message and strategy development. There's a lack of understanding of people's requirements – who are you speaking to and how can you frame the messages accordingly?”

Step 4: Stakeholder Engagement

Different audiences, same truth: AI can help you translate without contradicting yourself, if you're careful about the data you feed it.

Where AI helps

- Message versioning: Turn one core statement into eight variants for audiences such as investors, staff, customers and media, while maintaining consistency

- Concern prediction: Maps likely questions per group (staff: "are jobs safe?", investors: "financial impact?")

- Channel matching: Suggests format per audience (bullets for internal email, paragraph for press)

Where it hinders

- Privacy/compliance risk if you upload personal data; over-segmentation causing inconsistency

Safe use: Provide roles/job titles only (no names/emails). Ask for an influence × concerns × channel × cadence grid and a one-page engagement plan. (If you must process personal data, do it inside your CRM or an enterprise LLM instance; see ICO guidance.)

Amanda's view: “Have this data and information available as part of crisis planning. Don't wait until something happens and then turn to AI. It is only as good as the data it accesses. Internal comms staff will always know more about the employees than AI can. Can it understand changes in human behaviour that are identified in some of the recent research on crisis messaging? It often will default to what is known rather than identify the changes.”

Step 5: Execution & Channel Management

The nightmare scenario: your LinkedIn says one thing, an internal employee engagement email says another, and someone screenshots both.

Where AI helps

- Platform optimisation: Adapts your 500-word statement for internal comms, Instagram carousels, LinkedIn posts

- Timing coordination: Calculates optimal posting sequence across time zones and platform peak times

- Link/tag QC: Checks all handles are correct, links work, hashtags aren't hijacked

Where it hinders

- Auto-visuals that feel glib; over-automation can lead to generic, cold, and contradictory copy

Amanda's view: "Your approach will be scrutinised – remember American Airlines' statement reused by Air India. If there is a lack of detail to shape the statement and actions then it may not be appropriate to the situation or could become like wallpaper because all statements say the same. Although I would argue that a lot of crisis communication has already gone that way with people writing statements!"

Step 6: Monitoring & Feedback Loop

Once your response lands, you need to know if it's working. AI can process the firehose of feedback, but can it understand what it means?

Where AI helps

- Response velocity: Analyse 1,000 comments in 5 minutes vs 5 hours manually

- Theme clustering: Groups similar complaints ("can't log in", "lost access", "account locked" = authentication issue)

- Escalation flagging: Identifies high-risk responses (legal threats, media interest, influencer anger)
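Theme clustering and escalation flagging can be sketched with simple rules before you trust a model to do them. This minimal Python example, assuming comments arrive as plain text, maps different phrasings to a shared theme and flags comments containing legal or media cues. The theme map and cue words are hypothetical. Real tools use statistical or LLM-based clustering, and a keyword list will still miss sarcasm and cannot tell how influential the author is.

```python
import re

# Hypothetical theme map: phrases that point to the same underlying problem.
THEMES = {
    "authentication issue": ["can't log in", "cannot log in", "lost access", "account locked"],
    "billing issue": ["charged twice", "overcharged", "refund"],
}

# Word boundaries stop "sue" matching inside words like "issue".
ESCALATION = re.compile(r"\b(?:lawyer|legal action|sue|suing|journalist|reporter)\b")


def triage(comments):
    """Group comments by theme and flag any containing escalation cues."""
    clusters = {theme: [] for theme in THEMES}
    clusters["unclassified"] = []
    escalations = []
    for comment in comments:
        # Lower-case and normalise curly apostrophes so "can’t" matches "can't".
        text = comment.lower().replace("\u2019", "'")
        theme = next(
            (name for name, cues in THEMES.items() if any(cue in text for cue in cues)),
            "unclassified",
        )
        clusters[theme].append(comment)
        if ESCALATION.search(text):
            escalations.append(comment)
    return clusters, escalations


comments = [
    "Can't log in since this morning",
    "Account locked again!",
    "I was charged twice",
    "Lost access to everything, my lawyer will hear about this",
    "Is there an issue with the app?",
]
clusters, escalations = triage(comments)
for theme, items in clusters.items():
    print(theme, len(items))
print("Escalate:", escalations)
```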

Where it hinders

- Sentiment ≠ truth (irony/sarcasm); volume can drown out influence

Amanda's view: “Ultimately, you have to know who you are speaking to, what they think of you before the crisis, and then you can see what impact it has had and whether the communication has worked. Often, we don't have the right data and information to review our effectiveness."

Step 7: Review & Learning

After the dust settles, exhausted teams need to capture lessons before memory fades. AI never forgets, but can it truly learn?

Where AI helps

- Meeting efficiency: A 90-minute debrief becomes a 20-page transcript with a 1-page summary

- Action extraction: Pulls out all commitments ("Sarah will update the crisis manual by Friday")

- Pattern analysis: Can quickly compare this crisis to previous ones: what issues keep recurring?
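For action extraction, a transcription tool's built-in summary is the usual route, but a hypothetical pattern-matching sketch in Python shows the idea: find sentences shaped like “Name will do something by a deadline” and turn each into an owner, task and due date. The pattern is an assumption for illustration and will miss commitments phrased any other way, so the output is a draft list for the debrief owner to check.

```python
import re

# Hypothetical pattern for "Name will <task> by <day>" phrasing; real
# minutes are messier, so treat the output as a draft list to check.
ACTION = re.compile(r"\b([A-Z][a-z]+) will (.+?) by (\w+)")


def extract_actions(transcript):
    """Return owner/task/deadline dicts for each matching commitment."""
    return [
        {"owner": owner, "task": task, "due": due}
        for owner, task, due in ACTION.findall(transcript)
    ]


notes = (
    "Sarah will update the crisis manual by Friday. "
    "We agreed the holding statement worked. "
    "Tom will brief the board by Monday."
)
for item in extract_actions(notes):
    print(item)
```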

Where it hinders

- Over-simplifies organisational dynamics; misses emotional/cultural context

Amanda's view: “AI can analyse the feedback and data from people during the crisis. However, it may miss the nuances that people gather from a debrief. It needs to include more context.

“Also, can it really take you from a point made on the minutes through to the actions that are needed without understanding the history of the organisation and the crisis being faced?"

Verdict

Working hypothesis: based on current practical use, AI appears able to handle roughly 30% of the workload brilliantly (monitoring, clustering, drafting options, triage). The remaining 70% stays human: judgement, empathy, trade-offs, politics, and accountability, the elements where nuance and authenticity are crucial.

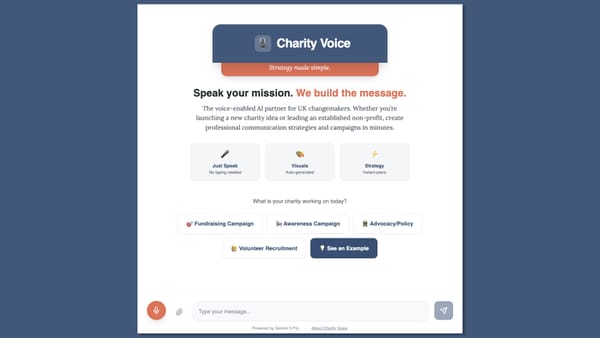

Amanda agrees with this assessment but adds a crucial caveat: "The key is preparation. Use AI now to understand its capabilities and limitations. Build your prompts library when you're calm. Test on low-stakes issues. Because when a real crisis hits, you don't want to be experimenting – you want proven tools and workflows ready to deploy."

Your Crisis-AI Starter Pack

Set up now:

- Talkwalker Alerts + Google Alerts for brand/execs/products + risk terms. (talkwalker.com, Google)

- One drafting LLM (Claude or ChatGPT) + a tiny library of approved prompts.

- Otter.ai or Fireflies for debrief capture → summary → action items. (help.otter.ai, Fireflies.ai)

- 5 prompts to save: SITREP (Step 2) • Statement red-team (Step 3) • Stakeholder grid (Step 4) • Comment triage (Step 6) • Debrief summary (Step 7)

Quarterly fire drill (90 mins): Pick a real but minor complaint and use it to run a simulation. Work through all seven steps above, time everything, and note what broke. Fix it before you need it for real.

Quick GDPR/ICO Checklist (UK)

✅ Never paste personal data into public AI tools

✅ If processing personal data, use enterprise tools with a DPA (or anonymise)

✅ Run a DPIA for any new AI crisis workflow

✅ Keep an audit trail: who used what, when, for what purpose

✅ “It was AI” isn’t a defence: you remain accountable (ICO)
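The audit trail point can be as simple as one structured record per use. This Python sketch, assuming a local JSON Lines file as the log, captures who used which tool, when, and for what purpose. The field names and filename are hypothetical; your data protection officer may require more detail (or a different system entirely), and a log on its own does not make a workflow compliant.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


def _utc_now():
    """Current time as an ISO 8601 string in UTC."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AIUsageRecord:
    """One audit-trail entry: who used which tool, when, and why."""
    user: str            # role or staff ID, per your own policy
    tool: str            # e.g. the enterprise LLM instance used
    purpose: str         # e.g. "SITREP draft"
    personal_data: bool  # should be False for any public tool
    timestamp: str = field(default_factory=_utc_now)


def log_line(record):
    """Serialise a record as one JSON line."""
    return json.dumps(asdict(record), sort_keys=True)


def append_to_log(record, path="ai_audit_log.jsonl"):
    """Append the record to a JSON Lines file (hypothetical filename)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(log_line(record) + "\n")


entry = AIUsageRecord("comms-lead", "enterprise LLM", "SITREP draft", False)
print(log_line(entry))
```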

I hope you’ve found some of the above useful. Do let me know your thoughts, and it would be great to get your feedback on use cases: what are you testing for crisis preparedness? What worked (or backfired)? Reply with your experiments: we’ll feature the best lessons in the next issue.

- For comprehensive crisis communication expertise, Amanda Coleman's books and consultancy services, visit amandacolemancomms.co.uk.