Prompt Engineering: Our Production Standards

Prompt engineering sounds sophisticated, but it's really just learning to ask AI systems better questions. Our approach focuses on what actually works for communications professionals.

Real Scenarios, Real Constraints

We develop and refine prompts using genuine communications tasks: press releases that need writing, crisis statements that need crafting, briefing documents that need structuring.

We test with different AI models, because a prompt that works beautifully with ChatGPT might fail dismally with Claude or Gemini. We'll always be specific about which model we used.

Our Independent Approach

We don't take payment for featuring specific prompts or techniques. Everything we share comes from our own experimentation or reader submissions we've verified independently.

When we adapt prompts from other sources, we credit the original and explain what we changed and why.

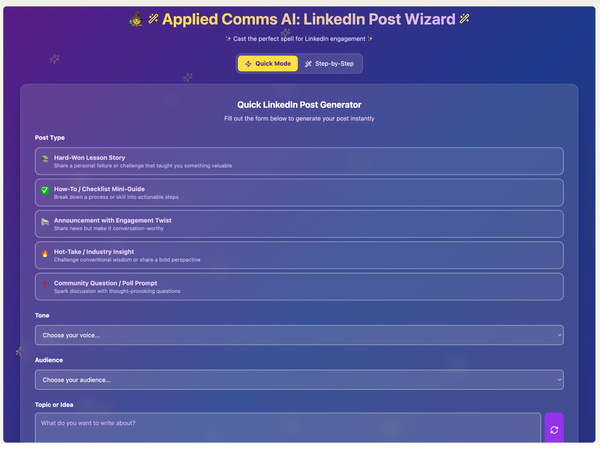

What We Share

- Working prompts, not perfect ones. We'd rather share a prompt that works 80% of the time than one that works perfectly only 20% of the time.

- Context matters. Every prompt comes with guidance on when to use it, what to expect, and where it typically fails.

- Version history. We show how prompts evolve through testing. The fifth iteration is usually better than the first, and we'll explain what we learned along the way.

- Failure modes. What happens when these prompts go wrong? How can you spot when the AI is struggling? What are the warning signs?

The Reality Check

Prompt engineering is useful, but it's not a magic solution. Most communications challenges can't be solved by finding the perfect way to ask an AI a question.

We'll always be honest about when human expertise is irreplaceable and when AI assistance genuinely helps. The best prompt is often the simplest one that accomplishes the task.